AI Data Workshops Framework

A Disciplined Approach to Building AI Data Expertise

Introduction

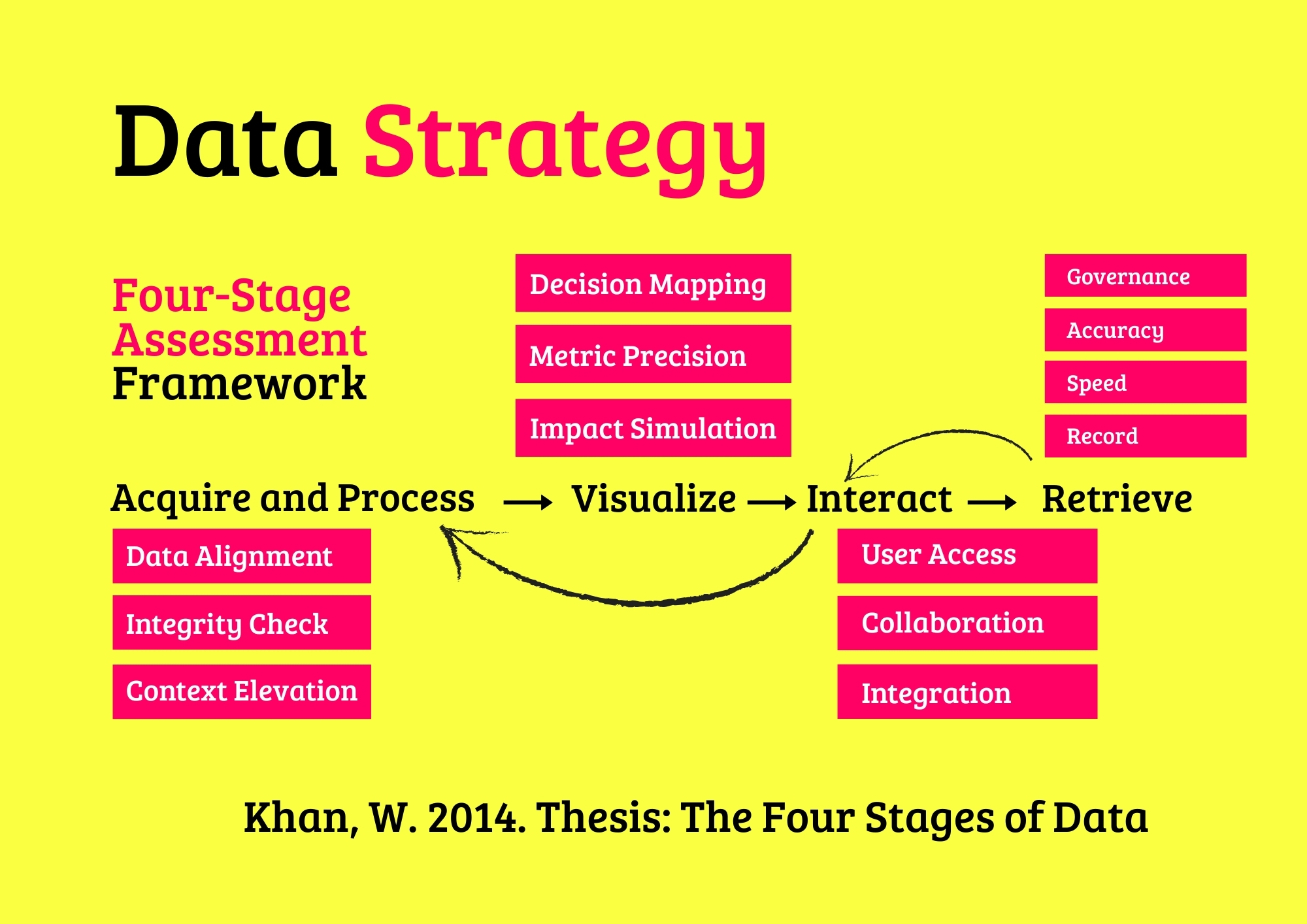

In an era where AI drives innovation, equipping teams with practical data skills is essential for staying competitive. The AI Data Workshops Framework provides a structured methodology for training teams to design, manage, and optimize AI-driven data workflows. Built on our core Four-Stage Platform—Acquire and Process, Visualize, Interact, and Retrieve—this framework empowers participants to build scalable, ethical, and efficient AI solutions tailored to organizational needs.

Designed for diverse audiences—from startups to global enterprises—the workshops integrate principles from AI, data science, and governance standards like DAMA-DMBOK and ISO 27001. By addressing technical proficiency, ethical AI practices, collaboration, and business alignment, the framework ensures teams gain actionable skills that foster innovation, trust, and operational excellence.

Whether you are a small business exploring AI, a medium-sized firm scaling data capabilities, a large corporate deploying enterprise AI, or a public entity ensuring responsible AI use, these workshops offer a pathway to AI data mastery.

Theoretical Context: The Four-Stage Platform

Structuring AI Data Learning for Practical Mastery

The Four-Stage Platform—(i) Acquire and Process, (ii) Visualize, (iii) Interact, and (iv) Retrieve—provides a structured lens for mastering AI data workflows. Drawing from AI engineering, data science, and experiential learning principles, this framework emphasizes hands-on practice and iterative skill-building to address real-world challenges. Each stage is explored through sub-layers focusing on technical skills, ethical considerations, collaboration, and innovation.

The framework incorporates 40 AI data practices across four categories (Data Preparation, Insight Generation, Model Interaction, and Data Governance), ensuring comprehensive learning. This approach enables participants to navigate AI complexities, delivering solutions that are robust, responsible, and aligned with organizational goals.

Core AI Data Practices

AI data practices are categorized by their objectives, enabling targeted skill development. The four categories (Data Preparation, Insight Generation, Model Interaction, and Data Governance) encompass 40 practices, each tailored to specific AI data needs. The categories and practices are outlined below, each with an example tool or technique.

1. Data Preparation

Data Preparation practices ensure clean, reliable data for AI, grounded in preprocessing techniques for quality.

- 1. Data Sourcing: Integrates diverse inputs (e.g., APIs).

- 2. Schema Design: Structures data (e.g., JSON schemas).

- 3. Data Cleaning: Removes errors (e.g., Pandas).

- 4. Feature Engineering: Enhances models (e.g., one-hot encoding).

- 5. Data Augmentation: Expands datasets (e.g., synthetic data).

- 6. ETL Pipelines: Automates flows (e.g., Apache Airflow).

- 7. Data Validation: Checks quality (e.g., Great Expectations).

- 8. Metadata Tagging: Tracks lineage (e.g., OpenLineage).

- 9. Cloud Ingestion: Loads data into cloud platforms such as AWS or GCP (e.g., BigQuery).

- 10. Normalization: Standardizes data (e.g., z-scoring).
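
Three of the practices above (data cleaning, one-hot encoding, and z-scoring) can be sketched in a few lines. This is a minimal illustration using only the Python standard library rather than Pandas; the records, field names, and helper functions are hypothetical and not part of the framework.

```python
from statistics import mean, pstdev


def clean_rows(rows, required):
    """Drop rows that are missing any required field (a simple form of cleaning)."""
    return [r for r in rows if all(r.get(k) is not None for k in required)]


def z_score(values):
    """Standardize values to mean 0 and unit population standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def one_hot(values):
    """Encode categorical values as 0/1 vectors, with categories in sorted order."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


# Hypothetical records; the field names are illustrative only.
rows = [
    {"channel": "web", "spend": 10.0},
    {"channel": "store", "spend": 20.0},
    {"channel": "web", "spend": None},  # dropped by clean_rows
    {"channel": "app", "spend": 30.0},
]

cleaned = clean_rows(rows, required=["channel", "spend"])
scaled = z_score([r["spend"] for r in cleaned])
encoded = one_hot([r["channel"] for r in cleaned])
```

In a workshop setting the same steps would more likely be done with a DataFrame library; the plain-Python version is used here only to keep each transformation visible.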

2. Insight Generation

Insight Generation practices create actionable AI outputs, leveraging visualization for clarity.

- 11. Data Exploration: Uncovers patterns (e.g., Jupyter).

- 12. Visualization Tools: Builds dashboards (e.g., Tableau).

- 13. Model Evaluation: Assesses performance (e.g., ROC curves).

- 14. Anomaly Detection: Spots outliers (e.g., Isolation Forest).

- 15. Trend Analysis: Identifies patterns (e.g., time-series).

- 16. Reporting Automation: Generates insights (e.g., Power BI).

- 17. Explainability: Clarifies predictions (e.g., SHAP).

- 18. Performance Metrics: Tracks KPIs (e.g., F1 score).

- 19. Real-Time Monitoring: Observes models (e.g., Grafana).

- 20. Bias Detection: Flags unfair outputs (e.g., Fairlearn).
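
As one concrete instance of the performance metrics listed above, the F1 score can be computed directly from prediction counts. The sketch below is a standard-library illustration under assumed binary labels (1 = positive); the function names and sample data are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, and false negatives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    return tp, fp, fn


def f1_score(y_true, y_pred, positive=1):
    """F1 = 2*TP / (2*TP + FP + FN); returns 0.0 when there are no positives at all."""
    tp, fp, fn = confusion_counts(y_true, y_pred, positive)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical labels and predictions for six examples.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]

score = f1_score(y_true, y_pred)  # 3 TP, 1 FP, 1 FN -> 0.75
```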

3. Model Interaction

Model Interaction practices enable dynamic AI management, rooted in orchestration for efficiency.

- 21. Model Training: Builds algorithms (e.g., TensorFlow).

- 22. Hyperparameter Tuning: Optimizes models (e.g., grid search).

- 23. Model Deployment: Launches solutions (e.g., Kubernetes).

- 24. API Integration: Connects models (e.g., FastAPI).

- 25. Version Control: Tracks iterations (e.g., MLflow).

- 26. A/B Testing: Compares models (e.g., split testing).

- 27. Scalability Planning: Handles growth (e.g., SageMaker).

- 28. User Feedback: Refines models (e.g., surveys).

- 29. Automated Retraining: Updates models (e.g., pipelines).

- 30. Access Management: Secures models (e.g., IAM).
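
Hyperparameter tuning by grid search amounts to scoring every combination of candidate settings and keeping the best one. The following is a minimal sketch of that idea with a toy threshold classifier; the data, parameter names, and `accuracy` function are assumptions made for illustration. Library implementations such as scikit-learn's `GridSearchCV` additionally use cross-validation, which this sketch omits.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Evaluate every parameter combination and return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for combo in product(*(param_grid[n] for n in names)):
        params = dict(zip(names, combo))
        score = score_fn(**params)
        if score > best_score:  # the first combination wins on ties
            best_params, best_score = params, score
    return best_params, best_score


# Toy labelled data (hypothetical): one feature value and a binary label per example.
xs = [0.1, 0.4, 0.35, 0.8, 0.9, 0.65]
ys = [0, 0, 0, 1, 1, 1]


def accuracy(threshold, scale):
    """Accuracy of the rule 'predict 1 when x * scale >= threshold'."""
    preds = [1 if x * scale >= threshold else 0 for x in xs]
    return sum(p == y for p, y in zip(preds, ys)) / len(ys)


best_params, best_score = grid_search(
    {"threshold": [0.2, 0.5, 0.7], "scale": [1.0, 0.5]},
    accuracy,
)
```

Grid search is exhaustive, so its cost grows with the product of the candidate list lengths; that trade-off is usually what motivates random or Bayesian search on larger grids.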

4. Data Governance

Data Governance practices ensure ethical AI use, grounded in compliance for trust.

- 31. Data Encryption: Secures storage (e.g., AES-256).

- 32. Access Controls: Restricts permissions (e.g., RBAC).

- 33. Audit Logging: Tracks actions (e.g., CloudTrail).

- 34. Anonymization: Protects privacy (e.g., k-anonymity).

- 35. Compliance Checks: Meets GDPR (e.g., audits).

- 36. Policy Creation: Sets rules (e.g., governance frameworks).

- 37. Bias Mitigation: Ensures fairness (e.g., reweighting).

- 38. Retention Policies: Manages lifecycle (e.g., ISO 27001).

- 39. Transparency Reports: Builds trust (e.g., disclosures).

- 40. Ethical Guidelines: Guides AI use (e.g., AI ethics).
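
The anonymization practice above mentions k-anonymity: every combination of quasi-identifier values must be shared by at least k records. The sketch below measures k for a small dataset before and after generalizing ages into ten-year bands. The records, field names, and bucketing rule are hypothetical; a real deployment would need a fuller privacy assessment than this check.

```python
from collections import Counter


def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest group sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values()) if groups else 0


def generalize_age(record, bucket=10):
    """Replace an exact age with a coarser band such as '30-39'."""
    low = (record["age"] // bucket) * bucket
    return {**record, "age": f"{low}-{low + bucket - 1}"}


# Hypothetical records; 'age' and 'postcode' act as quasi-identifiers.
records = [
    {"age": 31, "postcode": "SW1"},
    {"age": 34, "postcode": "SW1"},
    {"age": 38, "postcode": "SW1"},
    {"age": 42, "postcode": "SW1"},
    {"age": 45, "postcode": "SW1"},
    {"age": 47, "postcode": "SW1"},
]

k_before = k_anonymity(records, ["age", "postcode"])  # every exact age is unique
generalized = [generalize_age(r) for r in records]
k_after = k_anonymity(generalized, ["age", "postcode"])  # two bands of three records
```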

The AI Data Workshops Framework

The framework leverages the Four-Stage Platform to teach AI data skills through its four stages (Acquire and Process, Visualize, Interact, and Retrieve), ensuring alignment with technical, ethical, and business imperatives.

(I). Acquire and Process

Acquire and Process builds data foundations for AI. Sub-layers include:

(I.1) Data Collection

- (I.1.1.) - Connectivity: Integrates sources (e.g., APIs).

- (I.1.2.) - Quality: Ensures clean data.

- (I.1.3.) - Scalability: Handles large datasets.

- (I.1.4.) - Innovation: Uses cloud ingestion.

- (I.1.5.) - Ethics: Avoids biased sources.

(I.2) Data Preparation

- (I.2.1.) - Accuracy: Validates inputs.

- (I.2.2.) - Automation: Streamlines preprocessing.

- (I.2.3.) - Traceability: Tracks lineage.

- (I.2.4.) - Innovation: Leverages feature stores.

- (I.2.5.) - Sustainability: Minimizes compute waste.

(I.3) Pipeline Setup

- (I.3.1.) - Reliability: Ensures stable flows.

- (I.3.2.) - Efficiency: Optimizes processing.

- (I.3.3.) - Compliance: Aligns with regulations.

- (I.3.4.) - Innovation: Uses serverless ETL.

- (I.3.5.) - Inclusivity: Supports diverse data types.
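
Input validation, as named under Data Preparation above, can be illustrated with a small rule-based check applied to each record before it enters a pipeline. This is a hedged sketch in plain Python, not the API of any validation library; the schema format, field names, and `validate_record` helper are assumptions.

```python
def validate_record(record, schema):
    """Return a list of problems found; an empty list means the record passed."""
    problems = []
    for field, (expected_type, check) in schema.items():
        if field not in record:
            problems.append(f"{field}: missing")
            continue
        value = record[field]
        if not isinstance(value, expected_type):
            problems.append(f"{field}: expected {expected_type.__name__}")
        elif check is not None and not check(value):
            problems.append(f"{field}: failed range check")
    return problems


# Hypothetical schema: each field maps to (expected type, optional extra check).
SCHEMA = {
    "order_id": (str, None),
    "quantity": (int, lambda q: q > 0),
}

good = {"order_id": "A1", "quantity": 2}
bad = {"order_id": "A2", "quantity": 0}

good_problems = validate_record(good, SCHEMA)  # no problems expected
bad_problems = validate_record(bad, SCHEMA)    # quantity fails the range check
```

Returning a list of problems rather than raising on the first failure lets a pipeline log every issue in a record and decide separately whether to reject or quarantine it.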

(II). Visualize

Visualize generates actionable AI insights, with sub-layers:

(II.1) Data Exploration

- (II.1.1.) - Clarity: Uncovers patterns.

- (II.1.2.) - Speed: Accelerates analysis.

- (II.1.3.) - Coverage: Includes all datasets.

- (II.1.4.) - Innovation: Uses interactive tools.

- (II.1.5.) - Ethics: Ensures unbiased insights.

(II.2) Model Insights

- (II.2.1.) - Accuracy: Evaluates predictions.

- (II.2.2.) - Automation: Simplifies reporting.

- (II.2.3.) - Trust: Validates outputs.

- (II.2.4.) - Innovation: Uses explainable AI.

- (II.2.5.) - Transparency: Shares findings.

(II.3) Performance Tracking

- (II.3.1.) - Metrics: Monitors KPIs.

- (II.3.2.) - Timeliness: Detects issues fast.

- (II.3.3.) - Reliability: Ensures consistency.

- (II.3.4.) - Innovation: Uses real-time dashboards.

- (II.3.5.) - Ethics: Flags unfair outcomes.

(III). Interact

Interact enables dynamic AI model management, with sub-layers:

(III.1) Model Development

- (III.1.1.) - Efficiency: Streamlines training.

- (III.1.2.) - Accuracy: Optimizes models.

- (III.1.3.) - Scalability: Handles complexity.

- (III.1.4.) - Innovation: Uses AutoML.

- (III.1.5.) - Ethics: Ensures fair models.

(III.2) Model Deployment

- (III.2.1.) - Speed: Launches quickly.

- (III.2.2.) - Reliability: Prevents failures.

- (III.2.3.) - Compliance: Secures models.

- (III.2.4.) - Innovation: Uses MLOps.

- (III.2.5.) - Sustainability: Minimizes resources.

(III.3) Collaboration

- (III.3.1.) - Clarity: Shares results.

- (III.3.2.) - Engagement: Involves teams.

- (III.3.3.) - Trust: Builds confidence.

- (III.3.4.) - Innovation: Uses shared platforms.

- (III.3.5.) - Inclusivity: Supports diverse roles.

(IV). Retrieve

Retrieve ensures secure and ethical data access, with sub-layers:

(IV.1) Data Storage

- (IV.1.1.) - Security: Encrypts data.

- (IV.1.2.) - Scalability: Supports growth.

- (IV.1.3.) - Compliance: Meets GDPR.

- (IV.1.4.) - Innovation: Uses data lakes.

- (IV.1.5.) - Ethics: Protects privacy.

(IV.2) Data Access

- (IV.2.1.) - Speed: Enables fast queries.

- (IV.2.2.) - Accuracy: Ensures correct data.

- (IV.2.3.) - Reliability: Prevents downtime.

- (IV.2.4.) - Innovation: Uses indexing.

- (IV.2.5.) - Transparency: Logs access.

(IV.3) Governance

- (IV.3.1.) - Auditing: Tracks usage.

- (IV.3.2.) - Policies: Enforces rules.

- (IV.3.3.) - Accountability: Assigns ownership.

- (IV.3.4.) - Innovation: Uses automated governance.

- (IV.3.5.) - Ethics: Ensures fairness.
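
The access logging and auditing sub-layers above can be sketched as a decorator that records who performed which action, and when, before the data access runs. This is an in-memory illustration only; `AUDIT_LOG`, `audited`, and `read_customer` are hypothetical names, and a production system would write to durable, tamper-resistant storage instead.

```python
from datetime import datetime, timezone
from functools import wraps

AUDIT_LOG = []  # in-memory stand-in for a real audit store


def audited(action):
    """Decorator that appends an audit entry each time the wrapped function is called."""
    def decorator(func):
        @wraps(func)
        def wrapper(user, *args, **kwargs):
            AUDIT_LOG.append({
                "time": datetime.now(timezone.utc).isoformat(),
                "user": user,
                "action": action,
            })
            return func(user, *args, **kwargs)
        return wrapper
    return decorator


@audited("read_customer")
def read_customer(user, customer_id):
    """Hypothetical data-access function; returns a placeholder record."""
    return {"customer_id": customer_id}


record = read_customer("analyst", 42)
```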

Methodology

The workshops are rooted in AI, data science, and experiential learning, integrating ethical and collaborative principles. The methodology includes:

- Needs Assessment: Identify team skills and goals via surveys and interviews.

- Customized Curriculum: Design hands-on modules tailored to needs.

- Interactive Delivery: Facilitate workshops with real-world exercises.

- Skill Evaluation: Assess proficiency through projects and feedback.

- Ongoing Support: Provide resources for continuous learning.

AI Data Workshops Value Example

The framework delivers tailored outcomes:

- Startups: Learn lean AI pipelines for rapid prototyping.

- Medium Firms: Scale teams with automated AI insights.

- Large Corporates: Deploy enterprise AI with secure governance.

- Public Entities: Ensure ethical AI with transparent practices.

Scenarios in Real-World Contexts

Small E-Commerce Firm

A retailer struggles with AI adoption. The workshop reveals weak data prep (Acquire and Process: Data Preparation). Action: Train on Pandas cleaning. Outcome: Model accuracy up 15%.

Medium Marketing Agency

A firm lacks AI insights. The workshop identifies poor visualization (Visualize: Data Exploration). Action: Teach Tableau dashboards. Outcome: Campaign insights rise 20%.

Large Healthcare Provider

A provider needs AI scalability. The workshop notes manual deployment (Interact: Model Deployment). Action: Train on MLOps with Kubernetes. Outcome: Deployment time cut by 25%.

Public Agency

An agency seeks ethical AI. The workshop flags weak governance (Retrieve: Governance). Action: Teach GDPR-compliant auditing. Outcome: Stakeholder trust up 30%.

Get Started with Your AI Data Workshop

The framework equips teams with AI skills, ensuring innovation and ethics. Key steps include:

Consultation

Discuss training needs.

Customization

Tailor workshops to goals.

Delivery

Engage with hands-on sessions.

Support

Provide ongoing resources.

Contact: Email hello@caspia.co.uk or call +44 784 676 8083 to empower your team with AI data skills.

