Insights and Analytics Framework

A Scholarly Approach to Deriving Actionable Knowledge from Data

Introduction

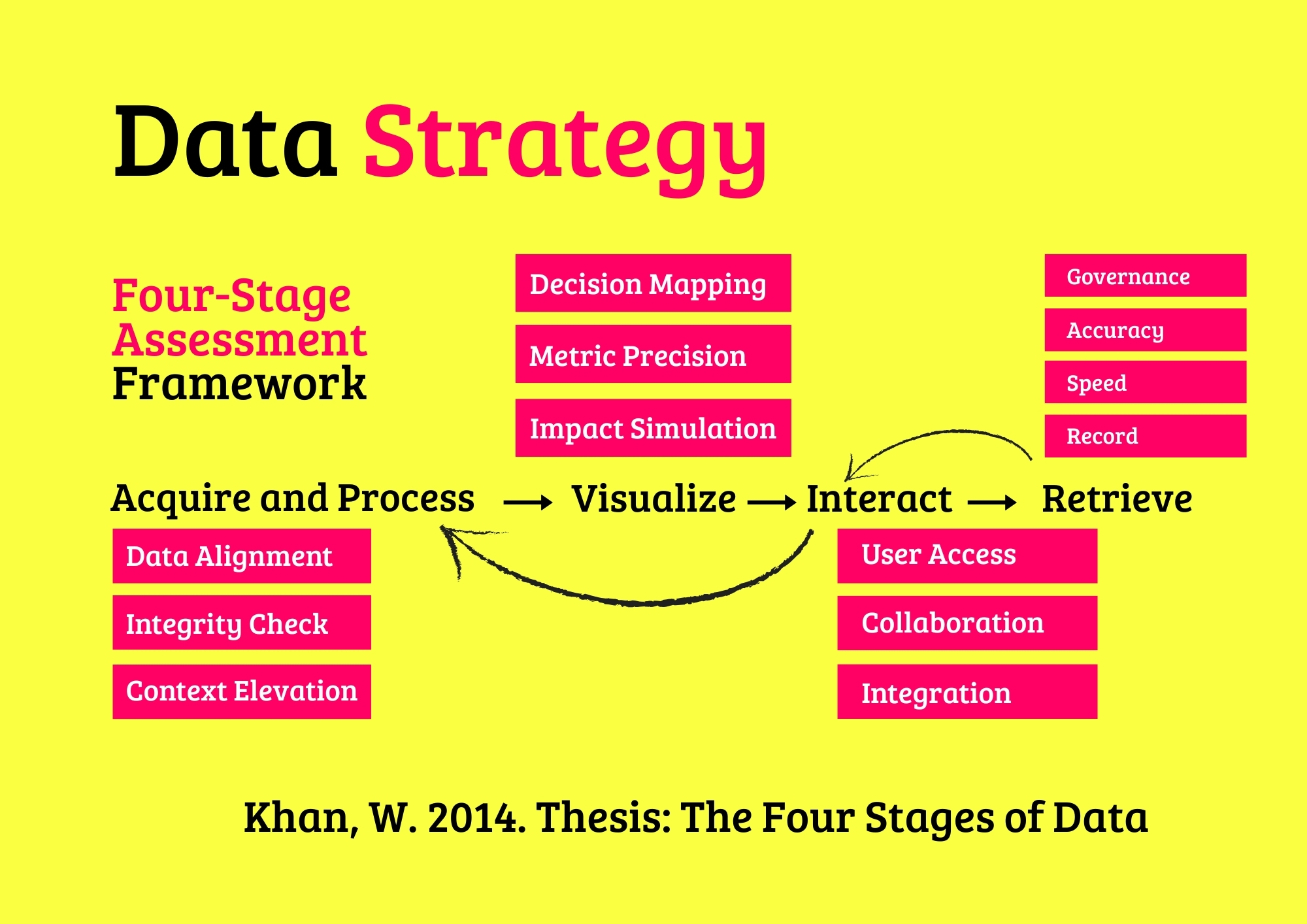

In the contemporary landscape of organizational management, insights and analytics serve as critical mechanisms for distilling complex datasets into actionable knowledge, enabling strategic decision-making, operational efficiency, and innovation. The proliferation of data across sectors necessitates robust analytical frameworks to uncover patterns, anticipate trends, and align with organizational objectives. Inspired by Khan’s (2014) Four Stages of Data framework, the present Insights and Analytics Framework offers a theoretically grounded methodology to evaluate and enhance analytical capabilities, emphasizing the derivation of meaningful insights.

The framework organizes the analytical process into four interconnected layers—(i) Source and Refine, (ii) Interpret, (iii) Engage, and (iv) Archive—each dissected into sub-layers to ensure a comprehensive assessment. Designed for organizations of all scales, from local startups to global institutions, it integrates principles from statistical theory, cognitive science, and ethical governance frameworks like DAMA-DMBOK and ISO 8000, balancing academic rigor with practical utility. By addressing dimensions such as analytical ethics, cultural relevance, sustainability alignment, and technological innovation, the framework empowers organizations to foster stakeholder trust, mitigate biases, and achieve sustainable outcomes.

Whether the organization is a small enterprise optimizing local markets, a medium-sized firm scaling operations, a large corporate navigating global challenges, or a public entity ensuring civic accountability, this framework provides a pathway to analytical mastery.

Theoretical Context: The Analytics Cycle

Positioning Insights within the Four Stages of Data

Khan’s (2014) Four Stages of Data framework—(i) Acquire and Process, (ii) Visualize, (iii) Interact, and (iv) Retrieve—offers a robust lens for understanding the data lifecycle. The present framework adapts this structure to focus on analytics, redefining the stages as Source and Refine, Interpret, Engage, and Archive to emphasize insight derivation. Informed by interdisciplinary insights from data science, decision theory, and behavioral economics, the framework prioritizes not only technical precision but also ethical integrity and contextual applicability.

The analytical cycle is operationalized through four dimensions, each evaluated via sub-layers addressing technical, ethical, cultural, and innovation-oriented criteria. By integrating 50 analytical methods across five categories (Exploratory, Descriptive, Causal, Predictive, and Optimization), the framework provides a structured approach to address diverse needs, from operational diagnostics to strategic foresight.

This academic foundation enables organizations to navigate data complexities, ensuring insights are robust, inclusive, and aligned with global sustainability goals.

Core Analytical Methods

Analytical methods are systematically classified by their objectives and computational attributes, enabling precise insight derivation. The five categories—Exploratory, Descriptive, Causal, Predictive, and Optimization—encompass 50 methods, each tailored to specific analytical needs. Below, the categories and methods are outlined, supported by applications and insights from statistics, machine learning, and decision theory.

1. Exploratory Methods

Exploratory methods uncover hidden patterns. Most are grounded in unsupervised learning, which makes them well suited to hypothesis generation and innovation.

- 1. Cluster Analysis: Groups similar entities (e.g., customer segments).

- 2. PCA: Reduces dimensionality for patterns (e.g., feature extraction).

- 3. Association Rules: Finds item relationships (e.g., product bundles).

- 4. Topic Modeling: Extracts text themes (e.g., feedback insights).

- 5. t-SNE: Visualizes high-dimensional data (e.g., market clusters).

- 6. Anomaly Detection: Identifies outliers (e.g., fraud signals).

- 7. Network Analysis: Maps connections (e.g., supply networks).

- 8. Self-Organizing Maps: Clusters complex data (e.g., behaviors).

- 9. Sentiment Analysis: Gauges opinion trends (e.g., reviews).

- 10. Multidimensional Scaling: Maps relationships (e.g., perceptions).
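As one illustration of the exploratory category, the sketch below shows a simple form of Anomaly Detection (method 6). It is a minimal example, not the framework's prescribed technique: it assumes a robust "modified z-score" rule based on the median and the median absolute deviation (MAD), a conventional cutoff of 3.5, and a hypothetical list of transaction amounts. The function name `mad_outliers` is invented for this example.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Return values whose modified z-score exceeds `threshold`.

    Assumption: the median/MAD rule is used here for illustration only;
    other anomaly detection techniques may suit real data better.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure deviations against
    # 0.6745 rescales MAD so scores are comparable to standard z-scores
    return [v for v in values if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical transaction amounts; the last value is deliberately extreme.
transactions = [42.0, 39.5, 41.2, 40.8, 43.1, 38.9, 40.2, 250.0]
flagged = mad_outliers(transactions)
print(flagged)
```

A median-based rule is used here because a single extreme value inflates the mean and standard deviation, which can hide the very outlier being sought.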

2. Descriptive Methods

Descriptive methods summarize data to reveal trends, rooted in statistical aggregation, critical for performance reporting.

- 11. Mean/Median Analysis: Quantifies central tendencies (e.g., sales averages).

- 12. Frequency Analysis: Tallies distributions (e.g., user types).

- 13. Cross-Tabulation: Examines relationships (e.g., region vs. sales).

- 14. Trend Plotting: Visualizes temporal patterns (e.g., revenue growth).

- 15. Pareto Analysis: Identifies key drivers (e.g., top issues).

- 16. Heatmap Summaries: Maps metric intensity (e.g., activity peaks).

- 17. KPI Tracking: Monitors indicators (e.g., project goals).

- 18. Distribution Analysis: Shows data spread (e.g., transaction sizes).

- 19. Quartile Analysis: Highlights variability (e.g., performance scores).

- 20. Benchmarking: Compares metrics (e.g., industry standards).
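To make the descriptive category concrete, the following sketch applies Pareto Analysis (method 15) to a hypothetical tally of customer issues. The 80% cutoff, the issue names, and the helper `pareto_drivers` are assumptions chosen for illustration.

```python
def pareto_drivers(counts, cutoff=0.8):
    """Return the largest categories that together reach `cutoff` of the total."""
    total = sum(counts.values())
    if total == 0:
        return []
    # Rank categories from most to least frequent
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    drivers, running = [], 0
    for name, count in ranked:
        drivers.append(name)
        running += count
        if running / total >= cutoff:
            break
    return drivers


# Hypothetical issue counts from a support log
issues = {
    "late delivery": 120,
    "damaged item": 45,
    "wrong item": 20,
    "billing error": 10,
    "other": 5,
}
top_issues = pareto_drivers(issues)
print(top_issues)
```

In this made-up data, two of the five categories account for at least 80% of all reported issues, which is the kind of "key driver" summary the method is meant to surface.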

3. Causal Methods

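Causal methods investigate cause-effect dynamics. Informed by econometric theory, they are essential for process diagnostics. Some entries, such as correlation analysis and decision trees, measure association only and need a supporting study design or domain evidence before a causal reading is justified.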

- 21. Correlation Analysis: Measures relationships (e.g., cost vs. output).

- 22. Regression Models: Quantifies impacts (e.g., sales drivers).

- 23. ANOVA: Tests group differences (e.g., campaign effects).

- 24. Propensity Scoring: Estimates the likelihood of receiving a treatment so that comparable groups can be contrasted (e.g., churn after a retention offer).

- 25. Path Analysis: Models variable pathways (e.g., marketing flows).

- 26. Decision Trees: Traces causal decisions (e.g., retention factors).

- 27. Granger Causality: Tests temporal causation (e.g., price vs. demand).

- 28. Factor Analysis: Identifies drivers (e.g., satisfaction causes).

- 29. Mediation Analysis: Explores indirect effects (e.g., loyalty paths).

- 30. Instrumental Variables: Addresses confounding (e.g., policy impacts).
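The first two causal methods, Correlation Analysis (21) and Regression Models (22), can be sketched in a few lines. The example below is a minimal illustration on hypothetical cost and output figures. It computes a Pearson correlation and an ordinary least squares fit by hand, and neither result on its own establishes causation. The function names are invented for this example.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def ols_fit(xs, ys):
    """Slope and intercept of the least squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var_x
    return slope, mean_y - slope * mean_x


# Hypothetical data: input cost and units of output per period
cost = [10, 20, 30, 40, 50]
output = [24, 47, 63, 88, 103]

r = pearson_r(cost, output)
slope, intercept = ols_fit(cost, output)
print(f"r = {r:.3f}, output ~ {slope:.2f} * cost + {intercept:.2f}")
```

The slope quantifies how output moves with cost in this sample. Interpreting it as an impact would additionally require ruling out confounders, which is where methods such as Instrumental Variables (30) come in.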

4. Predictive Methods

Predictive methods forecast outcomes, leveraging machine learning, vital for planning and risk mitigation.

- 31. Linear Regression: Predicts continuous outcomes (e.g., revenue).

- 32. Logistic Regression: Predicts binary events (e.g., churn odds).

- 33. Time Series Models: Forecasts trends (e.g., demand curves).

- 34. Random Forests: Enhances prediction via trees (e.g., risk scores).

- 35. Neural Networks: Captures complex patterns (e.g., market shifts).

- 36. Gradient Boosting: Boosts accuracy (e.g., cost predictions).

- 37. ARIMA: Models temporal data (e.g., stock prices).

- 38. Survival Models: Predicts event timings (e.g., failures).

- 39. Bayesian Models: Incorporates priors (e.g., sales forecasts).

- 40. Prophet: Handles seasonality (e.g., traffic trends).
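As a small example of a Time Series Model (method 33), the sketch below uses simple exponential smoothing to produce a one-step-ahead forecast. This technique is swapped in for brevity and is not one the framework names specifically. The smoothing factor `alpha`, the demand figures, and the function name are assumptions for illustration.

```python
def exponential_smoothing_forecast(series, alpha=0.5):
    """One-step-ahead forecast via simple exponential smoothing.

    `alpha` near 1 weights recent observations heavily; near 0 it
    smooths more. The value 0.5 is an arbitrary illustrative choice.
    """
    if not series:
        raise ValueError("series must contain at least one observation")
    level = series[0]
    for value in series[1:]:
        # Blend the newest observation with the running level
        level = alpha * value + (1 - alpha) * level
    return level


# Hypothetical weekly demand
demand = [100, 110, 105, 120]
forecast = exponential_smoothing_forecast(demand)
print(forecast)
```

Simple exponential smoothing has no trend or seasonal component, so data with strong seasonality would call for the richer models listed above, such as ARIMA or Prophet.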

5. Optimization Methods

Optimization methods recommend actions, grounded in operations research, key for resource efficiency.

- 41. Linear Programming: Optimizes allocations (e.g., schedules).

- 42. Monte Carlo: Models uncertainty (e.g., budget risks).

- 43. Decision Analysis: Weighs options (e.g., investments).

- 44. Simulation Models: Tests scenarios (e.g., logistics).

- 45. Markov Models: Predicts transitions (e.g., journeys).

- 46. Game Theory: Models interactions (e.g., pricing).

- 47. Queueing Theory: Optimizes flows (e.g., staffing).

- 48. Constraint Models: Balances trade-offs (e.g., routes).

- 49. Sensitivity Analysis: Tests impacts (e.g., cost shifts).

- 50. Dynamic Programming: Solves sequential decisions (e.g., inventory).
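Dynamic Programming (method 50) can be illustrated with the classic 0/1 knapsack formulation, used here as a stand-in for a resource allocation decision. The project costs, values, and budget below are hypothetical, and `best_portfolio_value` is a name invented for this sketch.

```python
def best_portfolio_value(projects, budget):
    """Maximum total value of projects whose combined cost fits the budget.

    `projects` is a list of (cost, value) pairs with integer costs.
    Each project can be chosen at most once (0/1 knapsack).
    """
    # best[b] holds the best value achievable with budget b
    best = [0] * (budget + 1)
    for cost, value in projects:
        # Iterate budgets downward so each project is used at most once
        for b in range(budget, cost - 1, -1):
            best[b] = max(best[b], best[b - cost] + value)
    return best[budget]


# Hypothetical (cost, value) pairs and a budget of 9 units
projects = [(4, 10), (3, 7), (5, 12), (2, 4)]
result = best_portfolio_value(projects, budget=9)
print(result)
```

The table `best` reuses the answers to smaller budgets instead of enumerating every subset of projects, which is the defining idea of dynamic programming.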

The Analytical Framework

The framework adapts Khan’s (2014) stages to assess analytical strategies through four dimensions—Source and Refine, Interpret, Engage, and Archive—ensuring alignment with technical, ethical, cultural, and strategic imperatives.

(I). Source and Refine

Source and Refine ensures data is reliable for analysis. Sub-layers include:

(I.1) Data Sourcing

- (I.1.1.) - Relevance: Aligns sources with goals (e.g., market data).

- (I.1.2.) - Scalability: Supports data growth.

- (I.1.3.) - Ethical Standards: Complies with applicable regulations such as GDPR.

- (I.1.4.) - Cultural Fit: Reflects diverse contexts.

- (I.1.5.) - Innovation: Uses IoT for precision.

(I.2) Data Quality

- (I.2.1.) - Accuracy: Ensures error-free data.

- (I.2.2.) - Efficiency: Speeds validation.

- (I.2.3.) - Traceability: Maintains audit trails.

- (I.2.4.) - Sustainability: Tracks emissions data.

- (I.2.5.) - Ethics: Mitigates bias risks.

(I.3) Data Enrichment

- (I.3.1.) - Metadata Depth: Enhances context.

- (I.3.2.) - Automation: Uses AI for efficiency.

- (I.3.3.) - Analytical Utility: Supports modeling.

- (I.3.4.) - Inclusivity: Ensures accessibility.

- (I.3.5.) - Relevance: Reflects regional needs.

(II). Interpret

Interpret derives actionable insights, with sub-layers:

(II.1) Insight Clarity

- (II.1.1.) - Comprehensibility: Ensures clear outputs (e.g., forecasts).

- (II.1.2.) - Timeliness: Delivers rapid insights.

- (II.1.3.) - Impact: Drives outcomes (e.g., cost savings).

- (II.1.4.) - Innovation: Leverages AI models.

- (II.1.5.) - Sustainability: Includes green metrics.

(II.2) Analytical Rigor

- (II.2.1.) - Precision: Aligns with source data.

- (II.2.2.) - Efficiency: Optimizes computation.

- (II.2.3.) - Trust: Builds stakeholder confidence.

- (II.2.4.) - Cultural Fit: Reflects local contexts.

- (II.2.5.) - Ethics: Ensures fair models.

(II.3) Predictive Capacity

- (II.3.1.) - Robustness: Reliable across scenarios.

- (II.3.2.) - Coverage: Models diverse outcomes.

- (II.3.3.) - Alignment: Supports strategic goals.

- (II.3.4.) - Innovation: Uses advanced algorithms.

- (II.3.5.) - Inclusivity: Accessible to all stakeholders.

(III). Engage

Engage fosters interaction with insights, with sub-layers:

(III.1) Access

- (III.1.1.) - Ease: Streamlines interfaces.

- (III.1.2.) - Compatibility: Supports multiple devices.

- (III.1.3.) - Inclusivity: Meets WCAG standards.

- (III.1.4.) - Cultural Fit: Uses multilingual options.

- (III.1.5.) - Innovation: Adopts biometrics.

(III.2) Collaboration

- (III.2.1.) - Sync: Ensures real-time updates.

- (III.2.2.) - Performance: Minimizes latency.

- (III.2.3.) - Adoption: Encourages team use.

- (III.2.4.) - Innovation: Integrates AI tools.

- (III.2.5.) - Ethics: Promotes equitable access.

(III.3) Integration

- (III.3.1.) - Interoperability: Connects platforms.

- (III.3.2.) - Reliability: Reduces errors.

- (III.3.3.) - Scalability: Supports expansion.

- (III.3.4.) - Sustainability: Tracks energy use.

- (III.3.5.) - Cultural Fit: Adapts to regional needs.

(IV). Archive

Archive ensures insights are accessible and secure, with sub-layers:

(IV.1) Accuracy

- (IV.1.1.) - Precision: Ensures correct retrieval.

- (IV.1.2.) - Error Control: Detects inaccuracies.

- (IV.1.3.) - Confidence: Builds trust.

- (IV.1.4.) - Innovation: Uses AI for accuracy.

- (IV.1.5.) - Ethics: Ensures fairness.

(IV.2) Speed

- (IV.2.1.) - Latency: Optimizes retrieval.

- (IV.2.2.) - Indexing: Speeds access.

- (IV.2.3.) - Load: Handles peak demand.

- (IV.2.4.) - Innovation: Uses caching.

- (IV.2.5.) - Sustainability: Minimizes energy use.

(IV.3) Preservation

- (IV.3.1.) - Completeness: Retains full records.

- (IV.3.2.) - Reliability: Prevents data loss.

- (IV.3.3.) - Compliance: Meets regulations.

- (IV.3.4.) - Sustainability: Tracks green metrics.

- (IV.3.5.) - Cultural Fit: Preserves heritage data.

(IV.4) Governance

- (IV.4.1.) - Coverage: Comprehensive policies.

- (IV.4.2.) - Enforcement: Strong mechanisms.

- (IV.4.3.) - Transparency: Builds trust.

- (IV.4.4.) - Inclusivity: Engages stakeholders.

- (IV.4.5.) - Ethics: Upholds fairness.

Methodology

The assessment is grounded in academic rigor, integrating analytics principles, cognitive science, and ethical frameworks. The methodology includes:

- Data Collection: Gather insights via interviews, audits, and method reviews.

- Readiness Evaluation: Assess clarity, precision, ethics, and innovation.

- Gap Analysis: Identify weaknesses, such as biased models.

- Strategic Roadmapping: Propose actions, from refining sources to ethical governance.

- Iterative Review: Monitor and adapt for continuous improvement.
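The Readiness Evaluation and Gap Analysis steps could be recorded in a simple structure like the one sketched below. The framework does not prescribe a scoring scale, so the 1 to 5 scores, the threshold of 3, and the function names are all assumptions made for illustration. Only the dimension and sub-layer names come from the framework itself.

```python
from statistics import mean

# Hypothetical 1-5 readiness scores per sub-layer (scale is an assumption)
scores = {
    "Source and Refine": {"Data Sourcing": 4, "Data Quality": 3, "Data Enrichment": 2},
    "Interpret": {"Insight Clarity": 2, "Analytical Rigor": 3, "Predictive Capacity": 4},
    "Engage": {"Access": 4, "Collaboration": 4, "Integration": 3},
    "Archive": {"Accuracy": 5, "Speed": 2, "Preservation": 4, "Governance": 3},
}


def dimension_averages(scores):
    """Average readiness score for each of the four dimensions."""
    return {dim: mean(subs.values()) for dim, subs in scores.items()}


def find_gaps(scores, threshold=3):
    """List (dimension, sub-layer) pairs scoring below `threshold`."""
    return [
        (dim, sub)
        for dim, subs in scores.items()
        for sub, score in subs.items()
        if score < threshold
    ]


averages = dimension_averages(scores)
gaps = find_gaps(scores)
for dim, sub in gaps:
    print(f"Gap: {dim} / {sub}")
```

The resulting list of gaps would feed the Strategic Roadmapping step, and re-scoring after each cycle supports the Iterative Review.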

Analytics Value Example

The framework delivers tailored value:

- Small Firms: Optimize markets with clustering, ensuring ethical sourcing.

- Medium Enterprises: Scale with predictive models, tracking sustainability.

- Large Corporates: Enhance global strategies with AI-driven insights.

- Public Entities: Improve accountability with transparent analytics.

- Startups: Drive innovation with optimization methods.

Scenarios in Real-World Contexts

Small Retail Firm

A retailer seeks market optimization. The assessment reveals unclear insights (Interpret: Insight Clarity). Action: Deploy cluster analysis. Outcome: Sales rise by 10%.

Medium-Sized Manufacturer

A firm aims to reduce inefficiencies. The assessment notes weak causal models (Interpret: Analytical Rigor). Action: Use regression analysis. Outcome: Costs drop by 12%.

Large Corporate

A multinational seeks strategic alignment. The assessment highlights siloed analytics (Engage: Integration). Action: Integrate platforms. Outcome: Efficiency gains of 15%.

Public Entity

A municipality strives for transparency. The assessment identifies slow archival access (Archive: Speed). Action: Implement caching. Outcome: Trust rises by 18%.

Get Started with Your Insights and Analytics Assessment

The framework aligns analytics with objectives, ensuring precision and ethics. Key steps include:

- Consultation: Explore analytical needs.

- Assessment: Evaluate methods comprehensively.

- Reporting: Receive gap analysis and roadmap.

- Implementation: Execute with iterative reviews.

Contact: Email hello@caspia.co.uk or call +44 784 676 8083 to optimize analytics strategies.
